In this post, we will see what Ollama is and how we can use it to run local LLMs.

But first of all, what is Ollama?

“Ollama is an open-source tool designed to run and manage large language models directly on our local machine. We can think of it as Docker, but specifically for LLMs. It packages model weights, configurations, and dependencies into a single, portable format, allowing us to run powerful models like Llama 2, Mistral, and more, right from our laptop.”

For those who use LLMs daily, Ollama offers several valuable benefits:

- Run models privately: Our data never leaves our local machine, offering better control over data privacy.

- Experiment with various models: Easily download and test different open-source LLMs like Llama, Code Llama, or other community-built variants.

- Customize settings: Adapt models to our specific needs by experimenting with parameters such as temperature or context length, or with a custom system prompt, and keep complete control over the AI engine powering our applications.

- Offline access and reliability: Once downloaded, our LLMs are available anytime, anywhere, even in offline environments.

For more information about Ollama, see the official website: https://ollama.com/

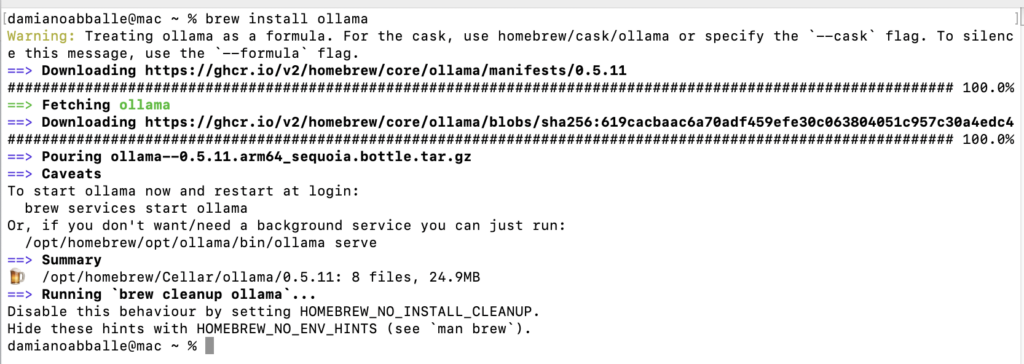

Let’s see how to install Ollama (in my case on macOS) and how to use it.

We start by opening a Terminal and running the command to download and install Ollama:

brew install ollama/tap/ollama

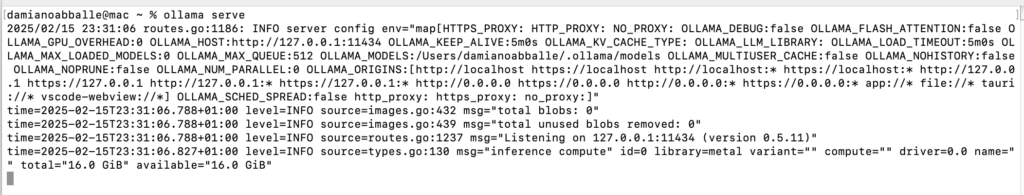

Then, we start the Ollama server with the following command:

ollama serve

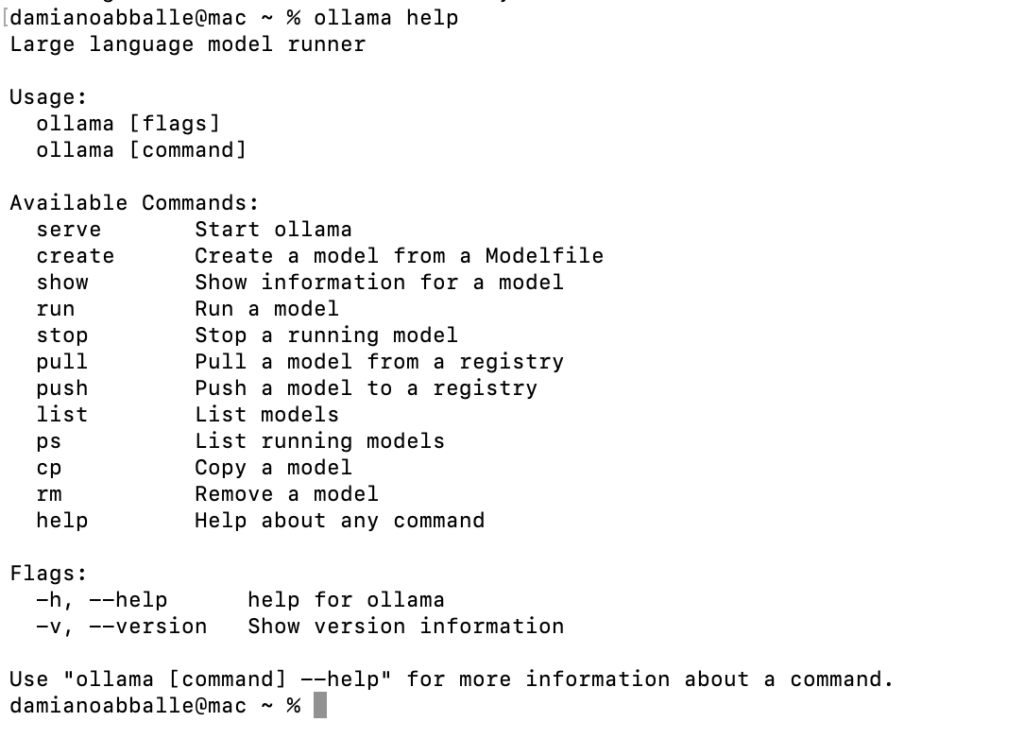
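By default, the server listens on port 11434, and its root endpoint replies with the plain text “Ollama is running”. As a quick sanity check, here is a minimal Python sketch, standard library only, that tells us whether the server answers; the function name is just an illustrative choice.

```python
import http.client
import urllib.error
import urllib.request

# Default address of a local Ollama server started with "ollama serve".
OLLAMA_URL = "http://localhost:11434"


def is_ollama_running(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an Ollama server answers at base_url, False otherwise."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            # The root endpoint replies with the text "Ollama is running".
            body = response.read().decode("utf-8", errors="replace")
            return "Ollama is running" in body
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeout, malformed reply...: no Ollama server here.
        return False


if __name__ == "__main__":
    print("Ollama running:", is_ollama_running())
```

If it prints False, the server is probably not started yet (or is listening on a different address).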

Finally, to see all the Ollama commands, we execute the command:

ollama help

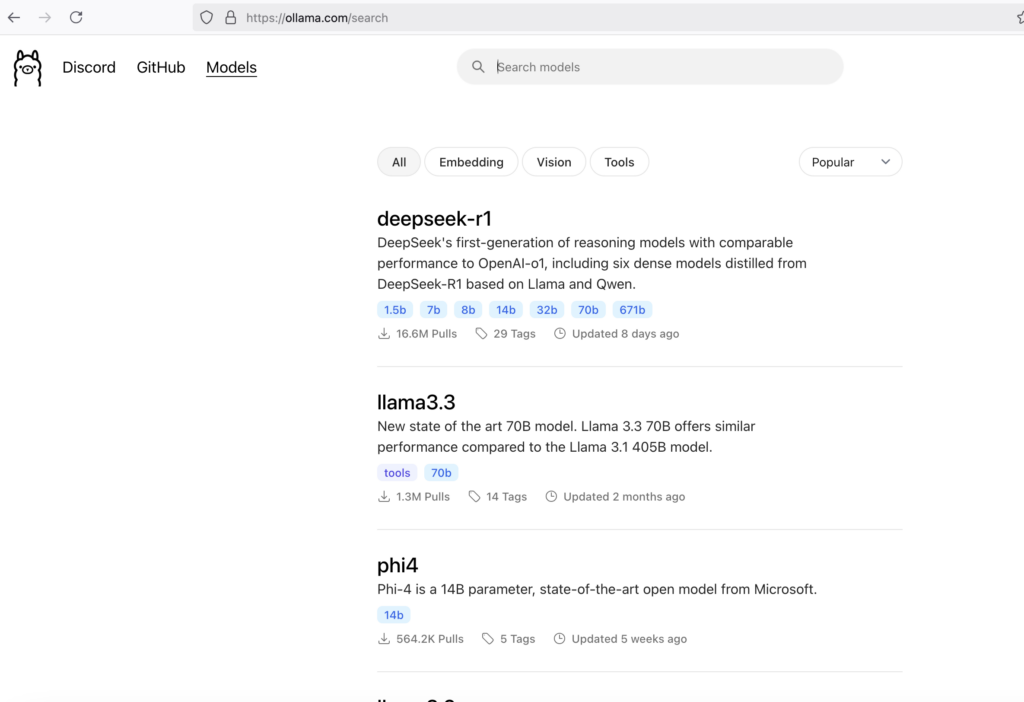

The next step is to go to the Ollama website, https://ollama.com/search, to find the list of all the LLMs available for download:

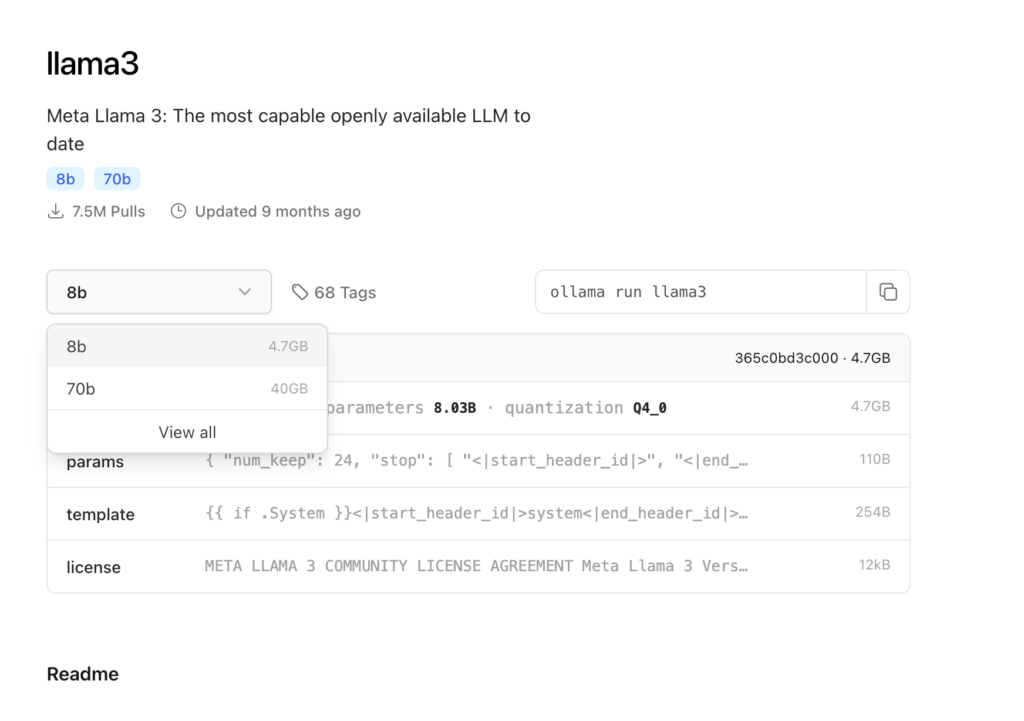

If we select one, we can see its different versions and the command to download it.

In this case, for llama3, we can download the version with 8 billion parameters or the one with 70 billion parameters.

The parameter count determines the size of the download: the more parameters, the bigger the file and the more memory we need to run the model.

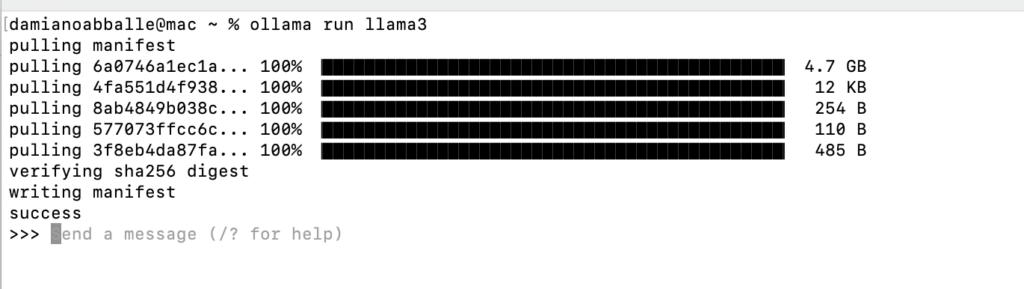
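To get a feel for why the parameter count matters, we can do a rough back-of-the-envelope estimate: the weights take about parameters × bits per weight ÷ 8 bytes. The sketch below assumes 4-bit quantized weights (a common choice for locally run models, but the real size is the one shown on the model page) and ignores metadata and runtime overhead, so the results are ballpark figures only.

```python
def estimate_weights_gb(params_billions: float, bits_per_weight: float = 4) -> float:
    """Rough size of the model weights in gigabytes (1 GB = 10**9 bytes).

    Assumes every parameter is stored with `bits_per_weight` bits and
    ignores metadata, tokenizer files and runtime memory overhead.
    """
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


for size in (8, 70):
    print(f"{size}B parameters at 4 bits: about {estimate_weights_gb(size):.1f} GB")
```

This gives about 4 GB for the 8B version and about 35 GB for the 70B one; with 16 bits per weight, the 8B model alone would already need about 16 GB.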

To download and run this LLM, we use the command below (if the model is not on our machine yet, ollama run downloads it first):

ollama run llama3

That’s it: now we can use this LLM.

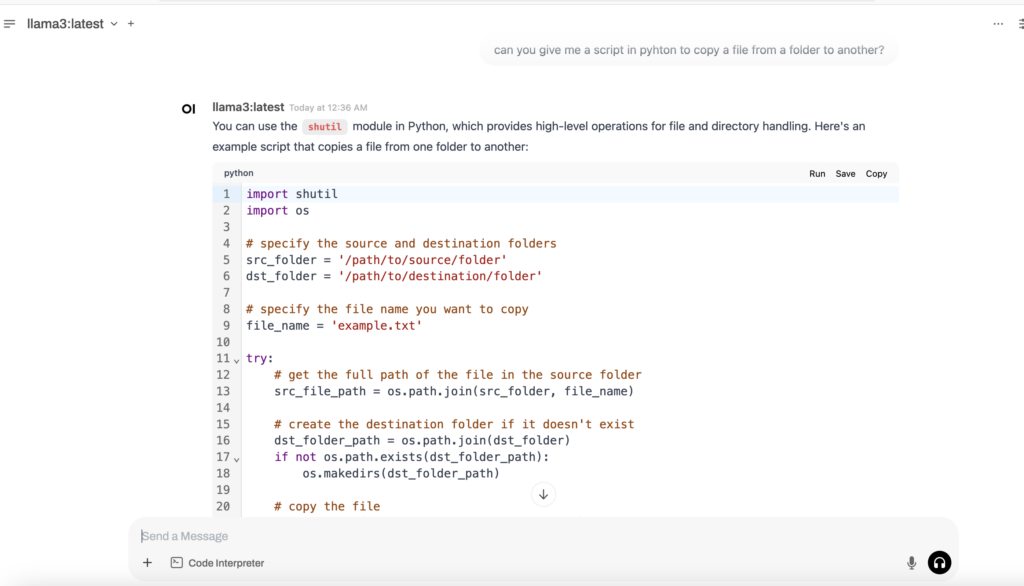

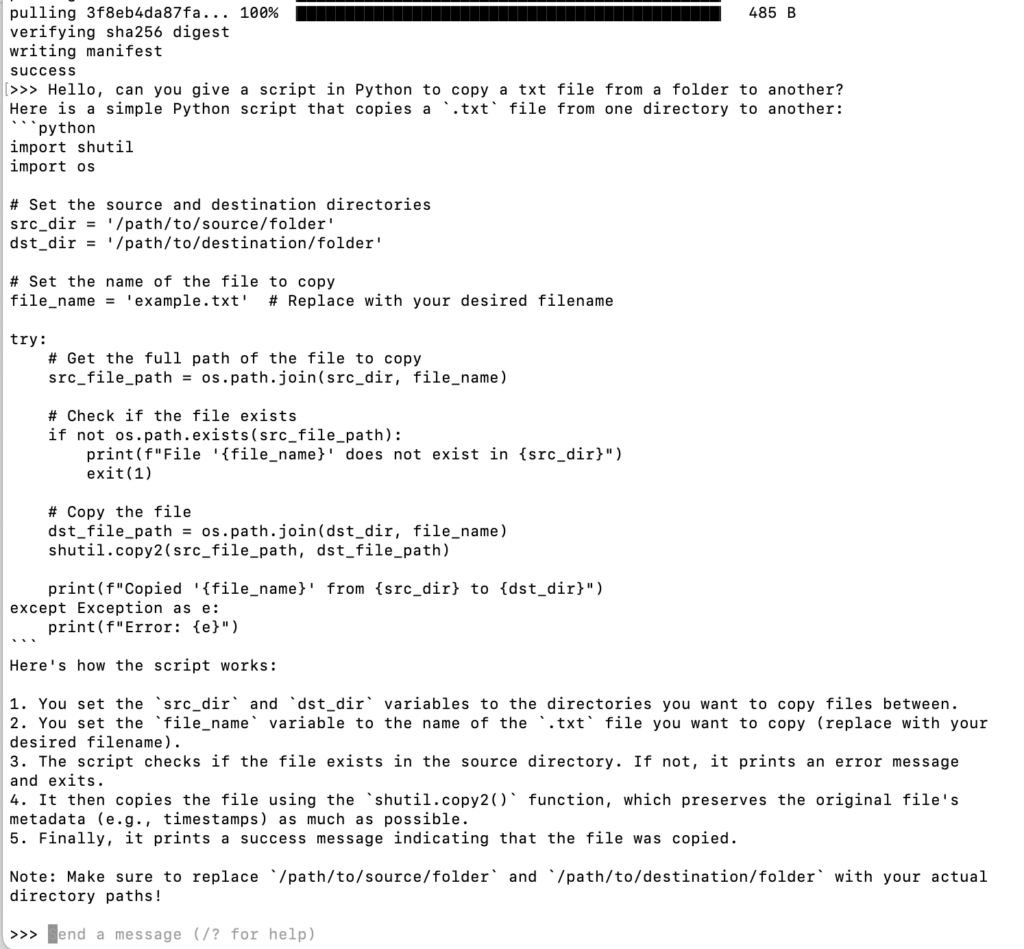
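Besides the interactive prompt, the server started with ollama serve also exposes a REST API on port 11434, which is what graphical front ends talk to. Here is a minimal Python sketch, standard library only, that sends a single prompt to the /api/generate endpoint with streaming disabled and reads the response field of the JSON reply. It assumes the server is running and llama3 has already been downloaded; the helper names are illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Prepare a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        result = json.loads(response.read().decode("utf-8"))
    return result["response"]


if __name__ == "__main__":
    print(generate("llama3", "Why is the sky blue? Answer in one sentence."))
```

With stream set to True instead, the server returns the answer as a sequence of JSON lines, one per generated chunk, which is how chat interfaces display text as it is produced.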

Let’s ask it for a Python script that copies a file from one folder to another:

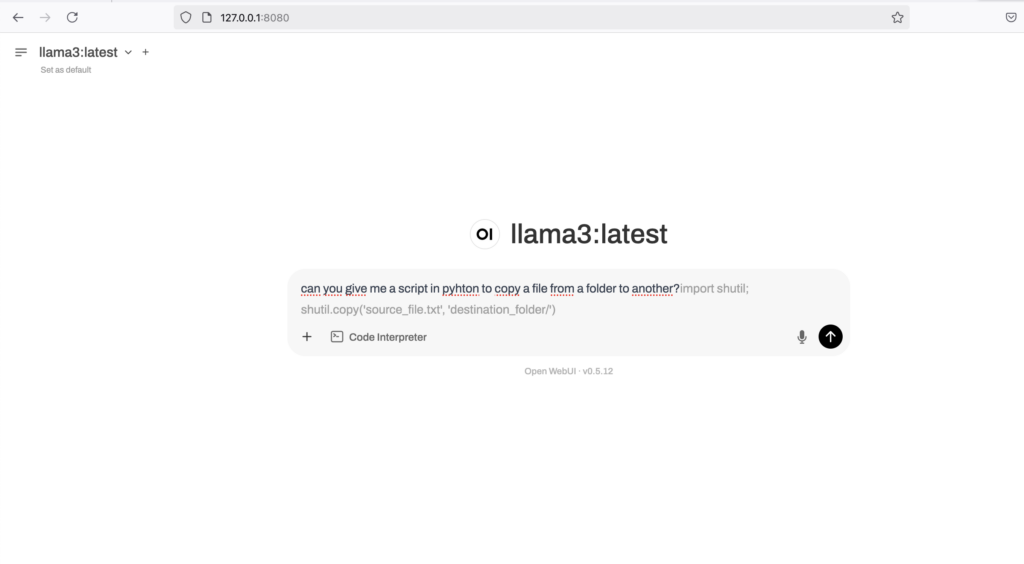
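For comparison, a script of the kind we asked for can be as short as the sketch below. It is not the model’s actual answer (that is in the screenshot), just one idiomatic way to do it with shutil.copy2, which also preserves metadata such as the modification time. The function name and command-line arguments are illustrative.

```python
import shutil
import sys
from pathlib import Path


def copy_file(source: str, destination_folder: str) -> Path:
    """Copy `source` into `destination_folder` and return the new file's path."""
    src = Path(source)
    dest_dir = Path(destination_folder)
    if not src.is_file():
        raise FileNotFoundError(f"Source file not found: {src}")
    # Create the destination folder (and any missing parents) if needed.
    dest_dir.mkdir(parents=True, exist_ok=True)
    # copy2 copies the contents plus metadata such as the modification time.
    return Path(shutil.copy2(src, dest_dir / src.name))


if __name__ == "__main__":
    if len(sys.argv) != 3:
        sys.exit("usage: python copy_file.py <source_file> <destination_folder>")
    print("Copied to", copy_file(sys.argv[1], sys.argv[2]))
```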

Clearly, using Ollama in the terminal is less comfortable than the interfaces of major AI assistants like ChatGPT, Claude, Gemini, and so on.

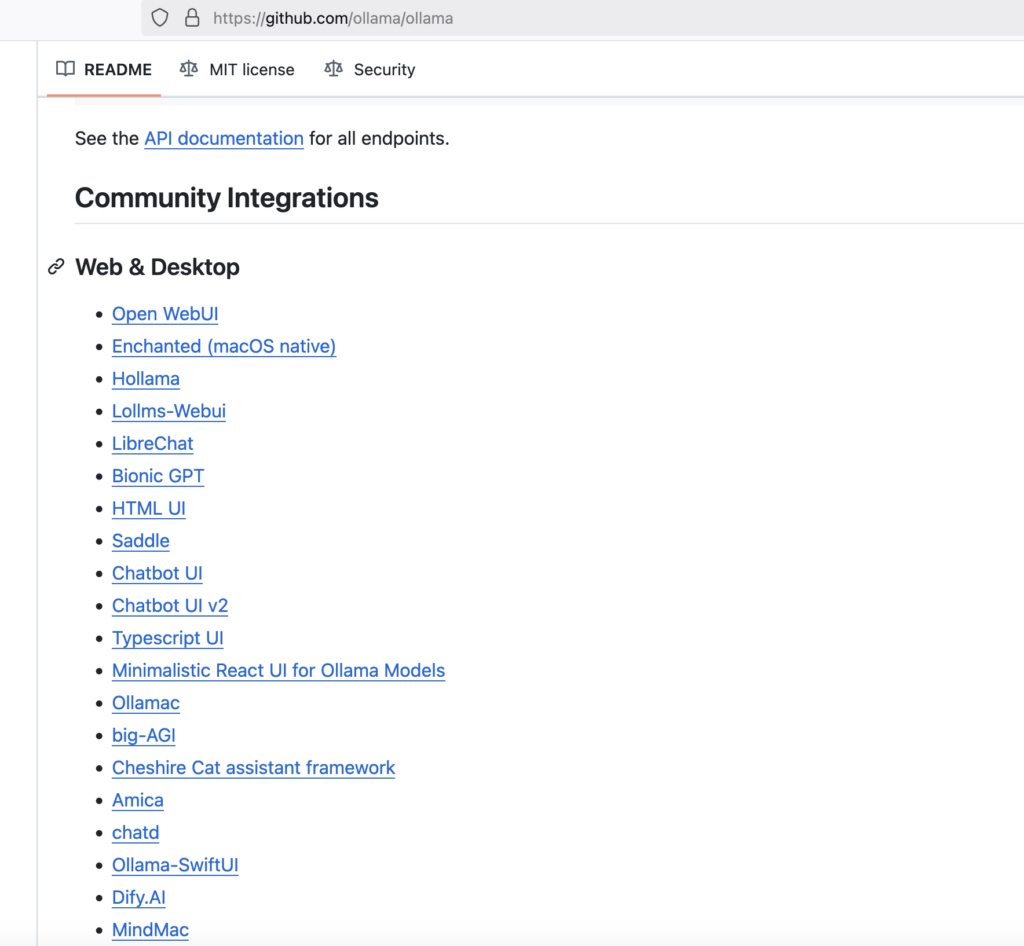

Fortunately, there are many UI projects that can help us, and we can find a long list on the project’s GitHub page:

https://github.com/ollama/ollama

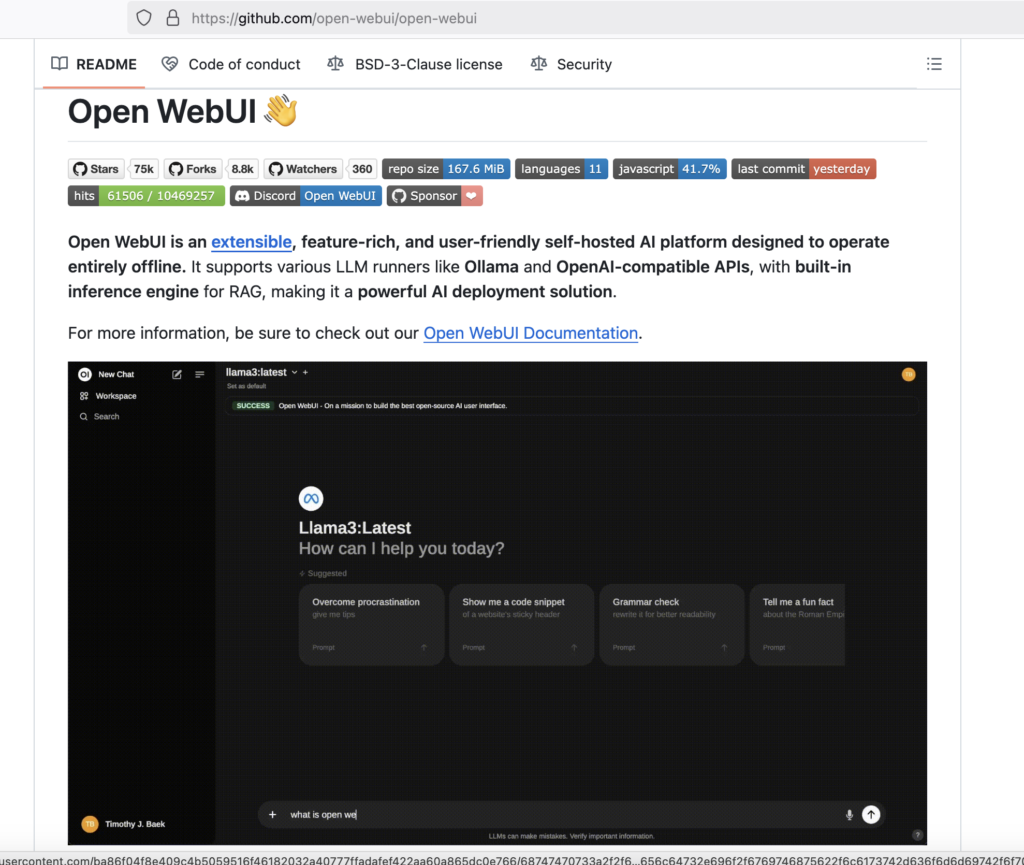

One that I recommend is Open WebUI.

I like it because its user interface is very nice and, above all, because it is very easy to install via Docker.

Let’s see how to install it:

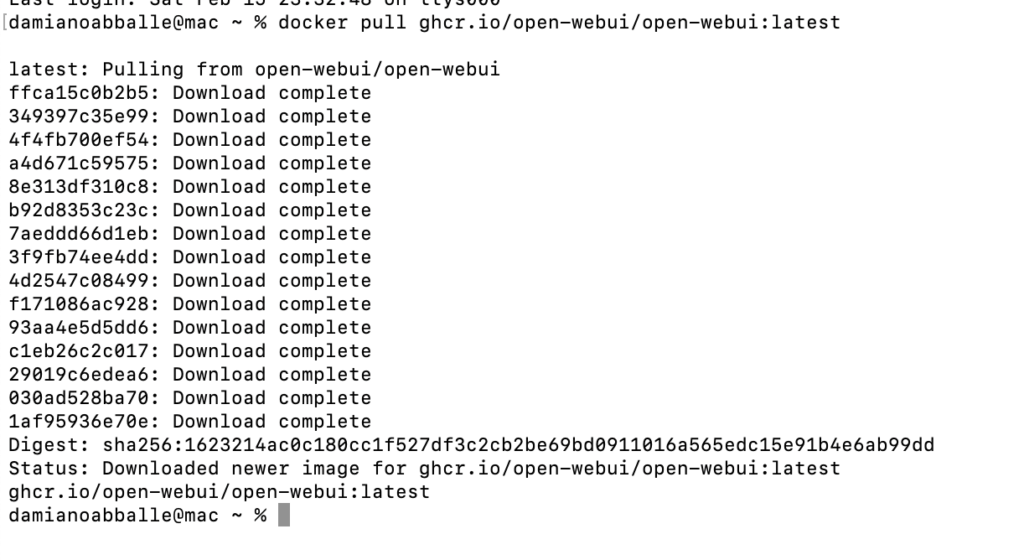

First, let’s pull the Docker image with the command:

docker pull ghcr.io/open-webui/open-webui:latest

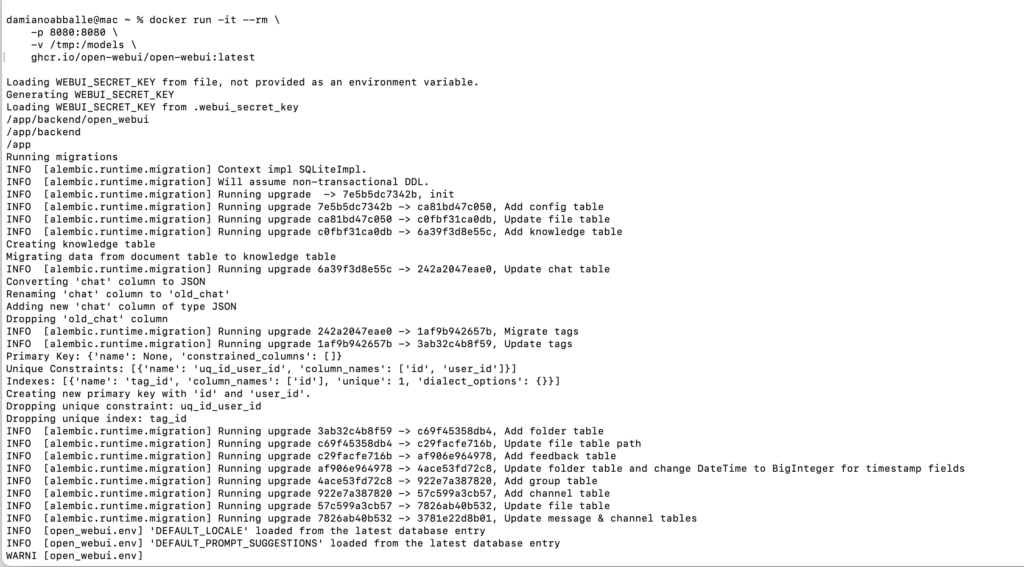

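Then, we run the Docker container. Here, -p 8080:8080 publishes the container’s port 8080 on our machine, and --rm removes the container when it stops, so anything saved inside it is lost at that point:

docker run -it --rm \
-p 8080:8080 \
-v /tmp:/models \
ghcr.io/open-webui/open-webui:latest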

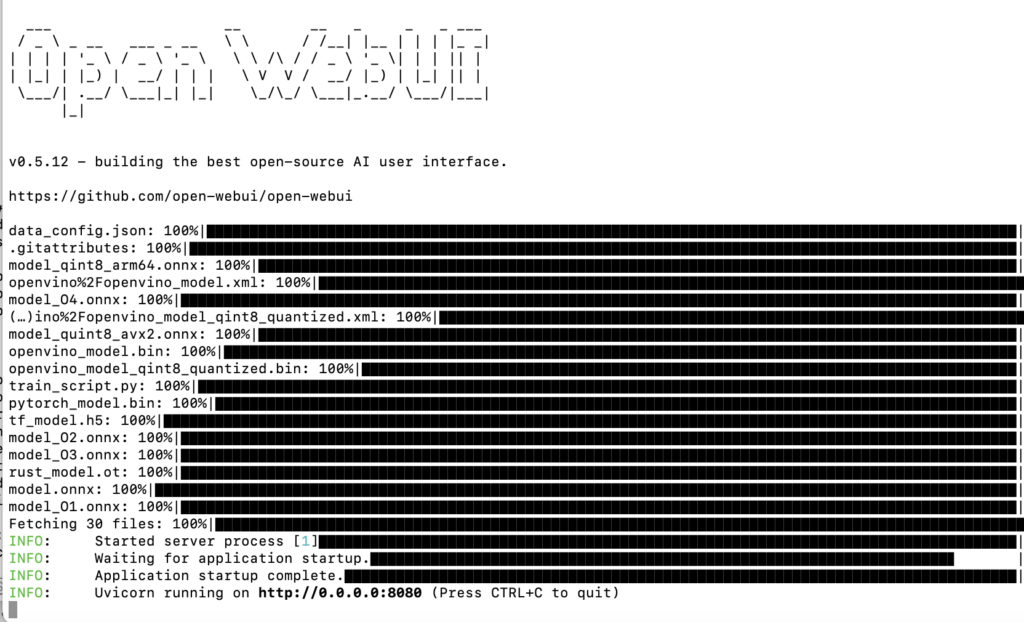

That’s it: Open WebUI is now up and running.

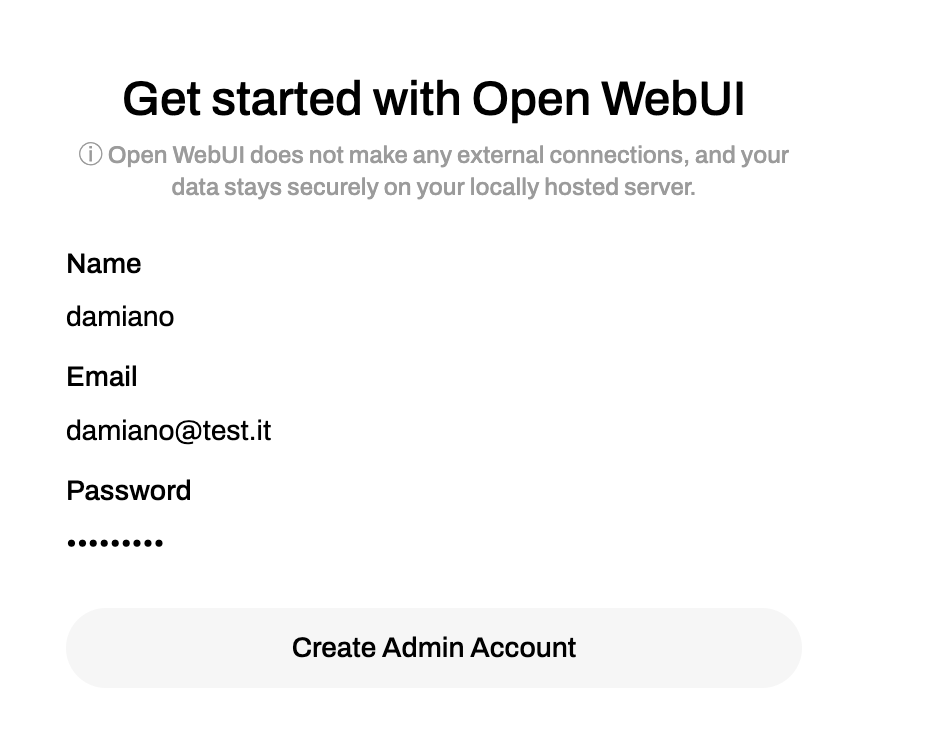

If we open a browser and navigate to http://localhost:8080, we will see the interface load.

From there, after a brief configuration, we can use all the LLMs we have downloaded locally.
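Front ends such as Open WebUI typically get this list of downloaded models from the Ollama server’s /api/tags endpoint, the same information that ollama list prints in the terminal. As a minimal sketch, here is how to read it from Python; the endpoint returns a JSON object with a models array, and the parsing is kept in its own (illustrative) function so it can be tried without a running server.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"


def parse_model_names(tags_json: str) -> list[str]:
    """Extract the model names from an /api/tags JSON response."""
    data = json.loads(tags_json)
    return [model["name"] for model in data.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask a running Ollama server which models are available locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
        return parse_model_names(response.read().decode("utf-8"))


if __name__ == "__main__":
    for name in list_local_models():
        print(name)
```

For a trimmed-down response such as {"models": [{"name": "llama3:latest"}]}, parse_model_names returns ["llama3:latest"].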